Sep 21, 2018

By Victoria Grdina

The University of Nebraska–Lincoln is teaming up with the National Science Foundation and several other research institutions to make major contributions to high-energy physics through high-powered computing tools and software innovation.

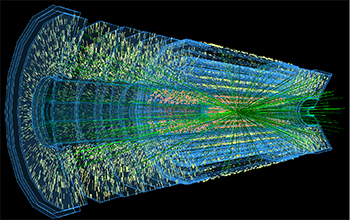

This month, NSF launched the Institute for Research and Innovation in Software for High-Energy Physics (IRIS-HEP), a $25 million collaborative effort to manage the data collected by the upcoming High-Luminosity Large Hadron Collider (HL-LHC), an upgrade of the world's most powerful particle accelerator. The LHC first observed the Higgs boson in 2012, and this next upgrade could lead to further breakthroughs in antimatter research.

"High-energy physics had a rush of discoveries and advancements in the 1960s and 1970s that led to the Standard Model of particle physics, and the Higgs boson was the last missing piece of that puzzle," said Peter Elmer of Princeton University, the NSF principal investigator for IRIS-HEP. "We are now searching for the next layer of physics beyond the Standard Model. The software institute will be key to getting us there."

The IRIS-HEP project will be co-funded within NSF by the Office of Advanced Cyberinfrastructure in the Directorate for Computer and Information Science and Engineering, and by the Division of Physics in the Mathematical and Physical Sciences Directorate. Participating researchers will receive $5 million per year for five years to develop new software and train its users.

The project will continue over the next eight years. By 2026, the HL-LHC is expected to have increased its luminosity tenfold, which means it will be able to produce around 1 billion particle collisions per second. Capturing, storing, and efficiently analyzing that much more data will require new computing solutions.

"Even now, physicists just can't store everything that the LHC produces," said Bogdan Mihaila, the NSF program officer overseeing the IRIS-HEP award. "Sophisticated processing helps us decide what information to keep and analyze, but even those tools won't be able to process all of the data we will see in 2026. We have to get smarter and step up our game. That is what the new software institute is about."

Much of that data and software will be stored and developed at the university's Holland Computing Center (HCC). Nebraska will contribute to the project's data-related research and development.

“We’ll be focusing on how to distribute data, make the data more compact, and process the data more efficiently,” said research associate professor Brian Bockelman. “There are really interesting data challenges in terms of getting the data to the right place, minimizing the number of copies and redundancy, and making it smaller.”

For the last 13 years, HCC has housed a significant amount of storage and computing for CMS, one of the LHC's experiments. It currently provides more than 6.5 petabytes of storage and more than 7,200 compute cores. HCC can not only store large amounts of data but also move it at up to 100 gigabits per second. Through its partnership with the Open Science Grid, an international consortium of research institutions, HCC also shares these resources with other organizations around the world: its 35,000 other compute cores are available for their use whenever they sit idle in Nebraska.

“Borrowing somebody else’s computing is a bit like empty seats on an airplane. The plane will fly anyway if no one is using it, but borrowing storage is like borrowing real estate,” Bockelman said. “It’s harder to find storage and it’s very expensive, so it will be challenging, but it’s an area where we can make more computer science connections.”

Those connections include partners at the collaborating institutions: Cornell University, Indiana University, Massachusetts Institute of Technology, New York University, Princeton University, Stanford University, UC Berkeley, UC San Diego, University of Chicago, University of Cincinnati, UC Santa Cruz, University of Illinois at Urbana-Champaign, University of Michigan-Ann Arbor, University of Puerto Rico-Mayaguez, University of Washington, and University of Wisconsin-Madison.

Bockelman said that in addition to expanding collaboration opportunities, he is interested in building on some of HCC’s previous projects to pursue new research.

“I really enjoy the two pieces Nebraska is involved in with scaling data systems and making them run at much higher efficiency,” Bockelman said. “All that’s left to do now is a whole lot of work.”